With the explosion of data volumes all enterprises are capturing, new technological solutions, such as Vertica, offer a solution to non-expert users who need to analyze and monetize their Big Data. If you are a non-expert user, the Database Designer (DBD) module in Vertica can help you choose a physical database design that minimizes storage footprint while optimizing the performance of the input query workload. The DBD can recommend good physical designs as quickly as possible using minimal computing resources.

One key technique by which Vertica can improve performance is data-specific encoding and compression. The encoding optimization component automatically chooses the best techniques, saving the cost of very-scarce human experts to manually specify encodings. While very effective, this process can be very resource and time intensive, often requiring all of a cluster’s resources for many hours to produce an optimal design. However, this approach is often not cost effective because the less resource intense the optimization process is, the more it will be used and the more data people can analyze.

The Vertica Analytic Database was designed from the ground up to analyze massive amounts of data, using research from MIT, Brown, and Brandeis. At least three current Vertica customers have over 1 PB in their production databases. One of the key technologies to handle petabyte-scale data sets is sophisticated encoding and compression algorithms because they minimize storage and I/O bandwidth requirements. Vertica automatically chooses an optimal physical database design including encoding and compression based on schema, sample data, and query workload. The storage optimization process is extraordinarily accurate.

With storage optimization, the specific challenges to address are:

- Identifying appropriate encoding/compression methods for each column type based on cardinality, sortedness, or both.

- Finding the best encoding/compression method among multiple candidate choices that yields the least storage footprint without compromising retrieval performance.

- Identifying a representative data sample for storage experiments, which requires:

- Finding an appropriate sample size

- Identifying an appropriate sampling technique

Data Encoding: Encoding is the process of converting data into a standard format. In Vertica, encoded data can be processed directly, which distinguishes encoding from compression. Vertica uses a number of different encoding strategies, depending on column data type, table cardinality, and sort order. The query executor in Vertica operates on the encoded data representation whenever possible to avoid the cost of decoding. It also passes encoded values to other operations, saving memory bandwidth. In contrast, row stores and most other column stores typically decode data elements before performing any operation. The following table presents the different encoding types along with their descriptions, emphasis on their ideal column type.

Data Compression: Compression is the process of transforming encoded data into a compact format. Compressed data cannot be directly processed; it must first be decompressed. Vertica uses integer packing for unencoded integers and LZO for compressible data. When compression is used extensively, it allows a column store to occupy substantially less storage than a row store. In a column store, every value stored in a column has the same data type which enables more effective encoding and compression, particularly in sorted columns. In a row store, each row contains multiple columns with different data types, resulting in a much less effective use of compression.

Storage Optimization: The best encoding type for each column is the one that achieves the best compression (least storage footprint). The DBD tries all ideal encoding candidates for each column, based on the column type and properties to find this optimal encoding. You choose a data sample, and then the DBD compares the storage footprints of the encoding candidates, skipping inappropriate choices. For example, RLE encoding is not an ideal choice for a column identified as high cardinality through statistics or primary key constraints. Thus, the DBD won’t try the RLE encoding type r such columns, as the following table shows. Also, in most cases, Database Designer wont try a column with an unambiguous encoding choice unless it affects the storage footprint of other columns.

| Encoding types | Description | Ideal for |

| AUTO(default) | Automatically picks based on properties of the data itself, when insufficient examples are known. For string, Boolean and float types, Lempel-Ziv-Oberhumer based (LZO) compression is used. For integer-based types, the compression scheme is based on the delta between consecutive column values. CPU requirements are relatively small. | Sorted, high cardinality columns such as primary keys. Also suitable for general-purpose applications for which no other encoding or compression scheme is applicable. |

| RLE (Run-length encoding) | Replaces sequences (runs) of identical values with a single pair that contains the value and number of occurrences. The storage footprint for RLE and AUTO encoding of string types is the same. | Sorted, low-cardinality columns. Ideal when the run length is large, such as when low-cardinality columns are sorted. |

| BLOCK-DICT (Block Dictionary) | Within a block, distinct values are stored in a dictionary. A list of references to that dictionary represents the data block. CPU requirements are significantly higher than for default encoding schemes. | Unsorted, low-cardinality columns, such as stock prices. |

| BLOCKDICT-COMP (Compressed Block Dictionary) | Similar to BLOCK_DICT except that dictionary indexes are entropy coded. Requires significantly more CPU time to encode and decode. | Unsorted, low-cardinality columns with extremely skewed value distribution. |

| DELTAVAL (Delta Value) | Data is recorded as a difference from the smallest value in a data block. CPU requirements are minimal, and the data never expands. | Unsorted, high-cardinality integer or integer-based columns. |

| DELTARANGE-COMP(Compressed Delta Range) | Stores each value as a delta from the previous one. This scheme has a high cost for both compression and decompression. | High-cardinality float columns that are sorted or confined to a range. |

| COMMONDELTA-COMP(Compressed Common Delta) | Builds a dictionary of all the deltas in the block and then stores indexes into the delta dictionary using entropy coding. If the delta distribution is excellent, columns can be stored in less than one bit per row. CPU requirements are extensive. | Sorted columns with predictable sequences and only occasional sequence breaks. (For example, you could use this encoding type for timestamps recorded at periodic intervals or primary keys). |

| GCD-DELTA (Greatest Common Divisor Delta Value) | Data is recorded as the difference from the smallest value in the data block divided by the greatest common divisor (GCD) of all entries in the block. CPU requirements for decoding GCDDELTA are minimal and the data never expands, but may take more encoding time than DELTAVAL. | Many-valued unsorted integer or integer-based columns, when the values are a multiple of a common factor. (For example, timestamps are stored internally in microseconds, so data this is only precise to the millisecond are all multiples of 1000). |

Data Sampling for Storage Optimization: Implementing an appropriate sampling technique is critical for the quality of encoding/compression achieved. We experimented two different methods: (a) random sampling and (b) clustered sampling. Random sampling involves selecting a random set of rows for encoding tests. The main disadvantage is performance, as the random sample is selected by scanning every row and applying a random function.

We also noticed that using such a random sample is usually detrimental for certain encoding types. For example, with RLE, it tends to increase the apparent number of distinct values and propensity toward sequences. This increase negatively affects COMMONDELTA-COMP and a few others. To overcome these disadvantages, we implemented a clustered sampling technique. Clustered sampling selects contiguous rows from equally spaced bands in the database, based on a preselected sample size and band count. This approach improves performance because Vertica reads only the required amount of data. It also improves quality of sample appropriate for encoding experiments.

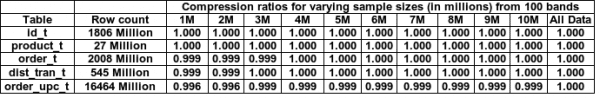

Determining an appropriate Sample Size for Storage Optimization: Selecting an appropriate sample size is critical for both performance and quality of the encoding/compression achieved. To decide on an appropriate sample size, we experimented with six real customer data sets. We tried different sample sizes in million increments starting from 1 million to 10 million samples. We then measured the compression ratio achieved, as explained by this equation:

Compression ratio = Storage Footprint (in bytes) with optimal encodings (selected using all available data)

Storage Footprint (in bytes) with approximate encodings (selected using a data sample)

The compression ratio cannot be more than 1.0 and the closer it is to 1.0, the better. We found that one million clustered samples are sufficient to achieve a compression ratio of 99.6%. We also noticed that 1 million samples are enough to find the best encodings in most columns. Other columns, however, might end up having the second or third best encoding.

Summary: The Database Designer module of Vertica Analytic Database automatically recommends physical designs that optimize query performance, storage footprint, fault tolerance and recovery to meet different customer scenarios and applications. In this blog, we focused on how big data storage optimization is being done using the Database Designer component of Vertica.